아래의 json string을 hive로 주물러봤다.

[{"product_id":220003038067,"timestamps":"1340321132000"},{"product_id":300003861266,"timestamps":"1340271857000"}]

하아~. 정말 거지같다.

get_json_object, json_tuple이라는 함수가 있지만, 위와 같은 형태의 json은 다루기 힘들었다.

구글에서 찾아보니 UDF로 해결하고 있었으며, 다음의 UDF를 찾았다.

jar 파일이 없다. 메이븐으로 빌드를 해야 하는데, 난 메이븐을 다뤄 본적이 한 번도 없다.

시도.

maven 설치

- http://maven.apache.org/download.cgi 에서 다운로드

- d:\apache-maven-3.5.0에 풀었다.

- MAVEN_HOME, JAVA_HOME 환경변수 설정

MAVEN_HOME = d:\apache-maven-3.5.0

JAVA_HOME = C:\Program Files\Java\jdk1.8.0_111

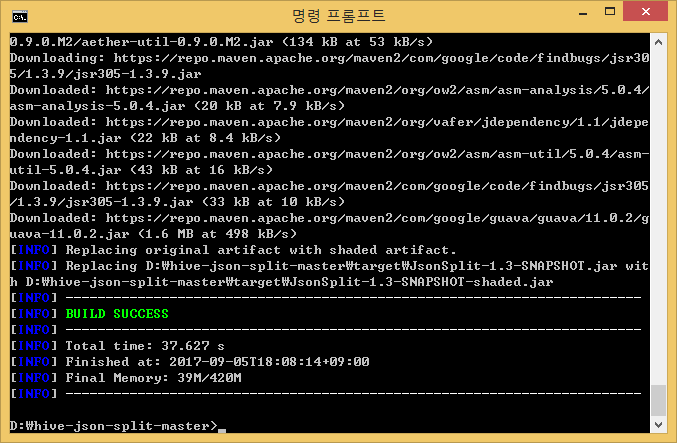

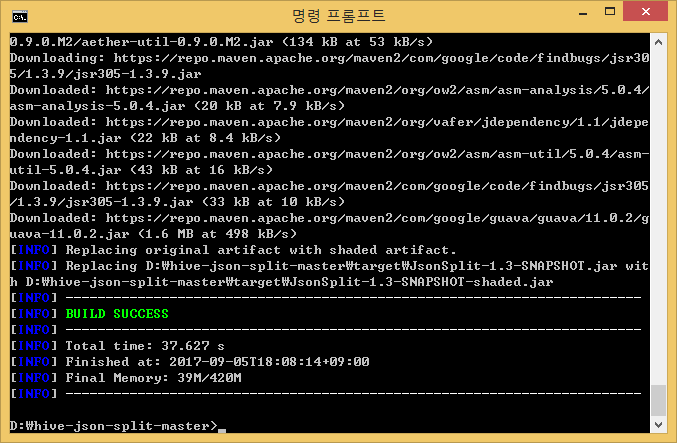

udf 빌드

- https://github.com/pythian/hive-json-split 에서 다운로드

- d:\hive-json-split-master에 풀었다.

d:

cd hive-json-split-master

D:\apache-maven-3.5.0\bin\mvn package

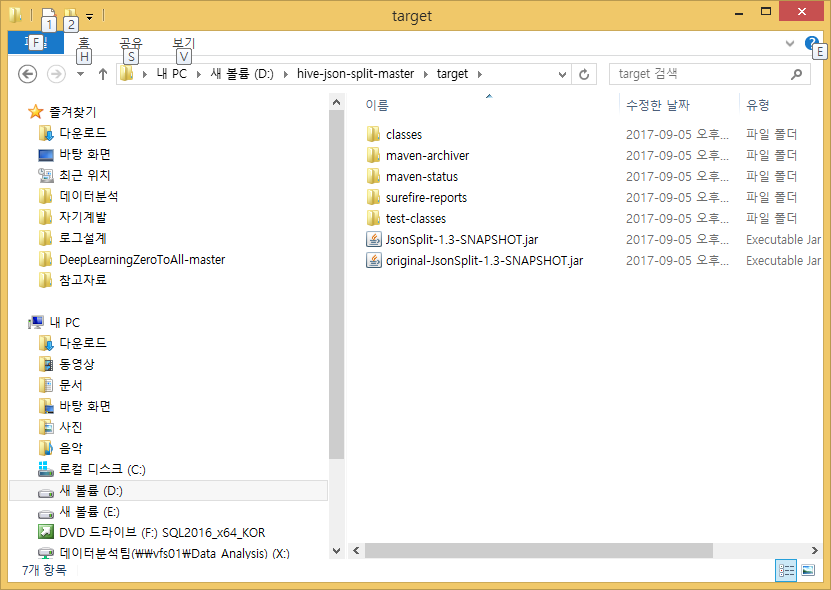

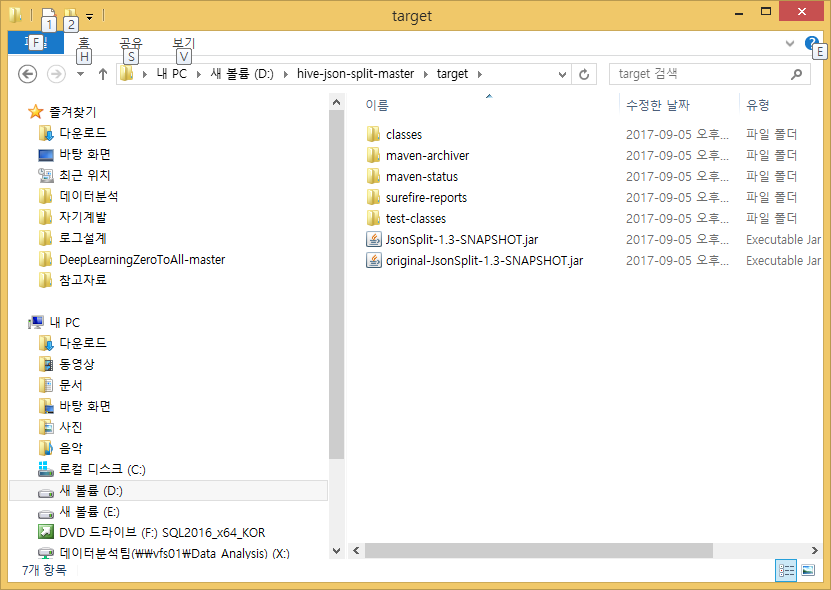

빌드가 성공하고나니 target이라는 디렉토리가 생기고, 아래와 같이 jar 파일 2개가 생기더라.

WinSCP를 이용해 hive가 돌어가는 서버에 JAR파일 2개를 /usr/hdp/current/hive-client/lib/ 에 복사 후 hive CLI에서 다음의 명령 수행

add jar /usr/hdp/current/hive-client/lib/JsonSplit-1.3-SNAPSHOT.jar;

create function json_split as 'com.pythian.hive.udf.JsonSplitUDF';

create function json_map as 'com.pythian.hive.udf.JsonMapUDF';

json test

create table json_test(json_string string);

insert into json_test values('[{"product_id":220003038067,"timestamps":"1340321132000"},{"product_id":300003861266,"timestamps":"1340271857000"}]');

set hive.cli.print.header=true;

select

b.col.row_id

, b.col.json_string

, c.*

from json_test a

lateral view explode(json_split(json_string)) b

lateral view json_tuple(b.col.json_string, 'product_id', 'timestamps') c as product_id, timestamps;

[root@sandbox ~]# hive

log4j:WARN No such property [maxFileSize] in org.apache.log4j.DailyRollingFileAppender.

Logging initialized using configuration in file:/etc/hive/2.6.1.0-129/0/hive-log4j.properties

hive> set hive.cli.print.header=true;

hive> select

> b.col.row_id

> , b.col.json_string

> , c.*

> from json_test a

> lateral view outer explode(json_split(json_string)) b

> lateral view json_tuple(b.col.json_string, 'product_id', 'timestamps') c as product_id, timestamps;

OK

row_id json_string c.product_id c.timestamps

0 {"product_id":220003038067,"timestamps":"1340321132000"} 220003038067 1340321132000

1 {"product_id":300003861266,"timestamps":"1340271857000"} 300003861266 1340271857000

Time taken: 2.067 seconds, Fetched: 2 row(s)

hive>

이 UDF보다 더 좋은 솔루션을 찾아 보았으나 UDF만한 것이 없다는 결론을 얻는데 약 5시간 걸렸다.

세션 열때마다 add jar /usr/hdp/current/hive-client/lib/JsonSplit-1.3-SNAPSHOT.jar;를 해야하니까 permanent function을 만드는 것이 좋겠다.

hdfs에 jar 파일을 올리고

hadoop fs -put JsonSplit-1.3-SNAPSHOT.jar ;

hive 쉘에서..

create function json_split as 'com.pythian.hive.udf.JsonSplitUDF' using JAR 'hdfs://localhost:8020/user/cloudera/JsonSplit-1.3-SNAPSHOT.jar';

create function json_map as 'com.pythian.hive.udf.JsonMapUDF' using JAR 'hdfs://localhost:8020/user/cloudera/JsonSplit-1.3-SNAPSHOT.jar';

os 쉘에서..

sudo service hive-server2 restart

json_map 함수는 아래와 같이 써먹는다.

--이건 sql server의 openjson과 유사

select explode(json_map(json_string))

from json_test

select json_map(b.col.json_string)['product_id']

from json_test a

lateral view outer explode(json_split(json_string)) b

다른 방법 #

이게 빠르다. 하지만 귀찮다.

select b.*

from json_test a

lateral view outer posexplode(split(regexp_replace(substr(json_string, 2, length(json_string)-2), '},\\{', '},,,,{'), ',,,,')) b

select b.*

from json_test a

lateral view explode(split(regexp_replace(regexp_extract(a.json_string,'^\\[(.+)\\]$',1),'\\}\\,\\{', '\\}\\|\\|\\{'),'\\|\\|')) b